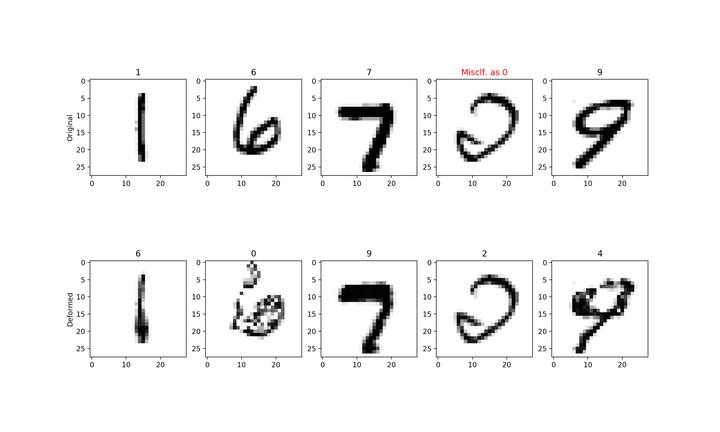

Adversarial deformations for ODE-net. First row: original images from the MNIST test set. Second row: the deformed images. See the thesis for details.

Abstract

We present a concise optimal control approach to optimizing continuous-depth deep learning models, discussing ideas and algorithms derived from the optimality conditions of Pontryagin's Maximum Principle. Models from the newly emerging field of constant-memory-cost learning are, however, vulnerable to adversarial attacks. In addition to a theoretical discussion of the inconsistency of neural networks, we experiment with adversarial deformations for neural ordinary differential equations (ODE-nets) on MNIST and compare our results with those of architectures based on convolutional neural networks.
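As an illustration of what "algorithms derived from the optimality conditions" can look like, here is a minimal sketch (not the thesis's own implementation) of the costate equation of Pontryagin's Maximum Principle used to compute training gradients. With Hamiltonian $H(x, p, \theta) = p^\top f(x, \theta)$ and terminal loss $\Phi(x(T))$, the state and costate satisfy $\dot{x} = \partial H / \partial p$, $\dot{p} = -\partial H / \partial x$ with $p(T) = \nabla \Phi(x(T))$, and the loss gradient is $\int_0^T \partial H / \partial \theta \, dt$. This sign convention makes $p$ equal to the gradient of the loss with respect to the state; some texts use the opposite sign. The vector field $f(x) = \tanh(Wx + b)$, the squared-error loss, the explicit Euler discretization and the function names are all hypothetical choices for illustration, and NumPy is assumed to be available.

```python
import numpy as np


def vector_field(x, W, b):
    """Hypothetical right-hand side of the ODE: dx/dt = tanh(W x + b)."""
    return np.tanh(W @ x + b)


def forward(x0, W, b, T=1.0, n_steps=20):
    """Explicit Euler integration of the state equation; returns all states."""
    h = T / n_steps
    xs = [x0]
    for _ in range(n_steps):
        xs.append(xs[-1] + h * vector_field(xs[-1], W, b))
    return xs, h


def loss(x0, y, W, b):
    """Terminal cost Phi(x(T)) = 0.5 * ||x(T) - y||^2."""
    xs, _ = forward(x0, W, b)
    return 0.5 * np.sum((xs[-1] - y) ** 2)


def costate_gradients(x0, y, W, b):
    """Backward costate sweep, the discrete analogue of p' = -dH/dx.

    Returns the gradient of `loss` with respect to W and b, accumulated
    as the discrete analogue of the integral of dH/dtheta.
    """
    xs, h = forward(x0, W, b)
    p = xs[-1] - y  # terminal condition: p_N = grad Phi(x_N)
    grad_W = np.zeros_like(W)
    grad_b = np.zeros_like(b)
    for k in range(len(xs) - 2, -1, -1):
        # Here p holds p_{k+1}; tanh'(z) = 1 - tanh(z)^2 at z = W x_k + b.
        sp = (1.0 - np.tanh(W @ xs[k] + b) ** 2) * p
        grad_W += h * np.outer(sp, xs[k])  # h * (df/dW)^T p_{k+1}
        grad_b += h * sp                   # h * (df/db)^T p_{k+1}
        p = p + h * (W.T @ sp)             # p_k = p_{k+1} + h (df/dx)^T p_{k+1}
    return grad_W, grad_b


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 3
    W, b = rng.normal(size=(d, d)), rng.normal(size=d)
    x0, y = rng.normal(size=d), rng.normal(size=d)
    grad_W, grad_b = costate_gradients(x0, y, W, b)

    # Central finite difference on a single weight entry as a sanity check.
    eps = 1e-6
    E = np.zeros_like(W)
    E[0, 1] = eps
    fd = (loss(x0, y, W + E, b) - loss(x0, y, W - E, b)) / (2 * eps)
    print("costate:", grad_W[0, 1], "finite difference:", fd)
```

Because the costate sweep differentiates the discretized dynamics exactly, the two printed numbers should agree up to finite-difference error. Only the current state trajectory is stored here; the constant-memory variants mentioned above instead re-integrate the state backward alongside the costate.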
